Unity Neural Network Rendering

Introduction & Motivation

With the emergence of AI and its increasing importance in the game industry, I believe the ability to build, modify and integrate AI into existing game pipelines will be a crucial skill for future technical artists, given the interdisciplinary nature of the role. My proficiency in AI theory and neural network programming positions me at the frontier of integrating AI with digital art.

I am perhaps among the first technical artists in the world (!) to experiment with neural network rendering in Unity. As a Unity AI internal beta tester, I used the internal beta package Sentis, which is designed to be the neural network interface for Unity.

In this work I added a TensorFlow VAE neural network to Unity's default render pipeline, so that I could achieve arbitrary style transfer in real time given a style reference image.

Model Architecture & Optimization

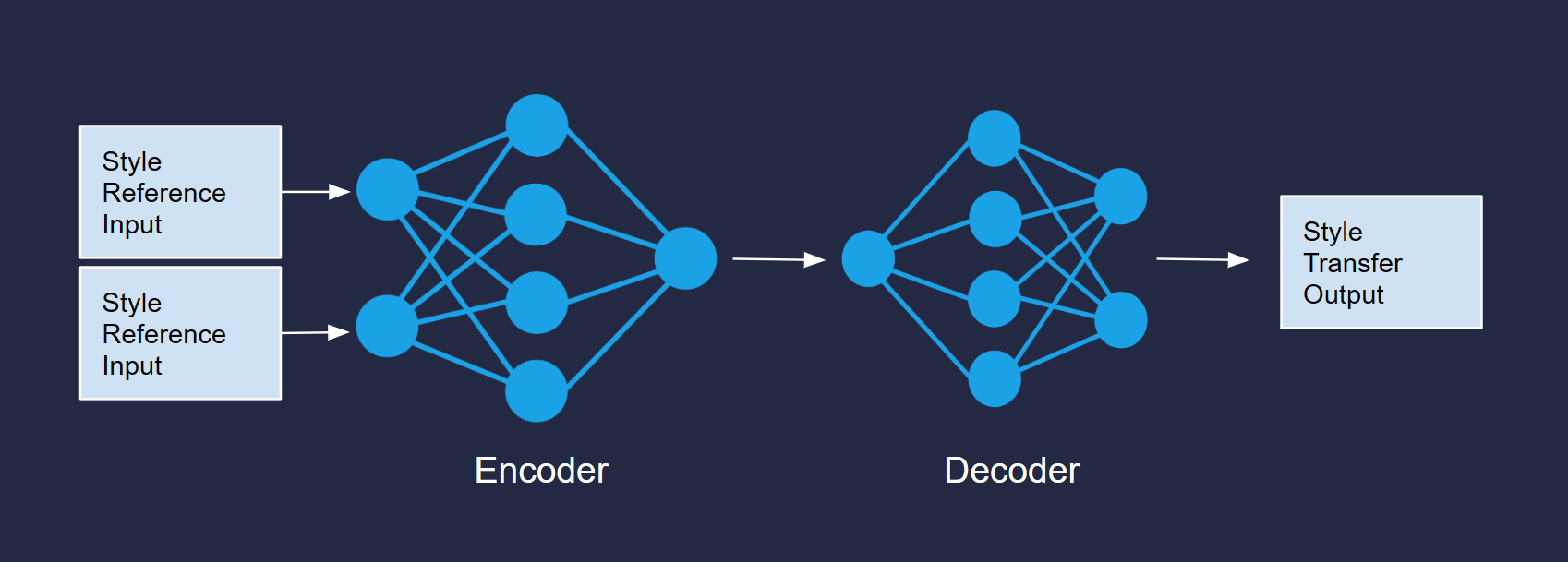

I edited the TensorFlow style transfer model and converted it to the Open Neural Network Exchange (.onnx) format, the format that Unity supports. The model takes a source image (which will be the rendered scene in Unity) as well as a style reference image, and transforms the source image into the art style of the reference image. The architecture of the model (with some abstraction) is shown on the right.

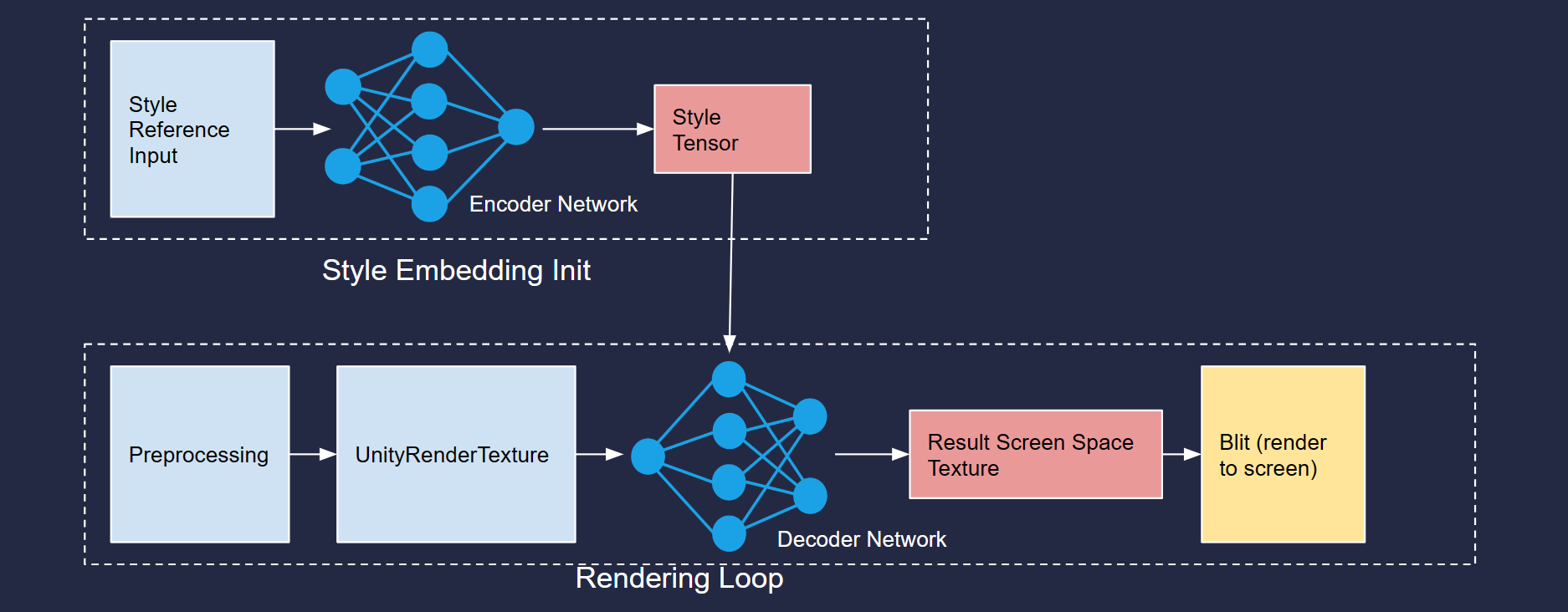

In the original architecture, every full neural network query encodes both the source image and the style reference, then runs the decoding network, and this happens in every frame. However, I noticed that because the style reference image is fixed, its embedding is also fixed in this specific model. The style reference encoding therefore only needs to be executed once at initialization, and the resulting embedding can be saved and reused. This increases the speed by almost 30%! The modified architecture is shown in the accompanying figure.
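The caching idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `CachedStyleTransfer`, `toy_encode_style` and `toy_stylize` are hypothetical names, and the toy functions are trivial stand-ins for the real encoder and decoder networks, not the actual model.

```python
from typing import Callable, List

Image = List[float]      # hypothetical flat image, values in [0, 1]
Embedding = List[float]  # hypothetical style embedding


class CachedStyleTransfer:
    """Runs the style encoder once, then reuses the embedding for every frame."""

    def __init__(
        self,
        encode_style: Callable[[Image], Embedding],
        stylize: Callable[[Image, Embedding], Image],
        style_image: Image,
    ) -> None:
        self._stylize = stylize
        # The style image is fixed, so its embedding is computed a single time here.
        self._style_embedding = encode_style(style_image)

    def render(self, frame: Image) -> Image:
        # Per-frame work: only the source encoding + decoding part runs.
        return self._stylize(frame, self._style_embedding)


def toy_encode_style(style_image: Image) -> Embedding:
    # Stand-in "encoder": the mean brightness as a one-element embedding.
    return [sum(style_image) / len(style_image)]


def toy_stylize(frame: Image, embedding: Embedding) -> Image:
    # Stand-in "decoder": blend each pixel halfway toward the style brightness.
    return [0.5 * pixel + 0.5 * embedding[0] for pixel in frame]
```

Calling `render` once per frame on a single `CachedStyleTransfer` instance invokes the style encoder only in the constructor, which is where the per-frame saving comes from.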

Unity Integration

Several steps are needed to make the model run in Unity. Here I pick out a few key functions to demonstrate and explain:

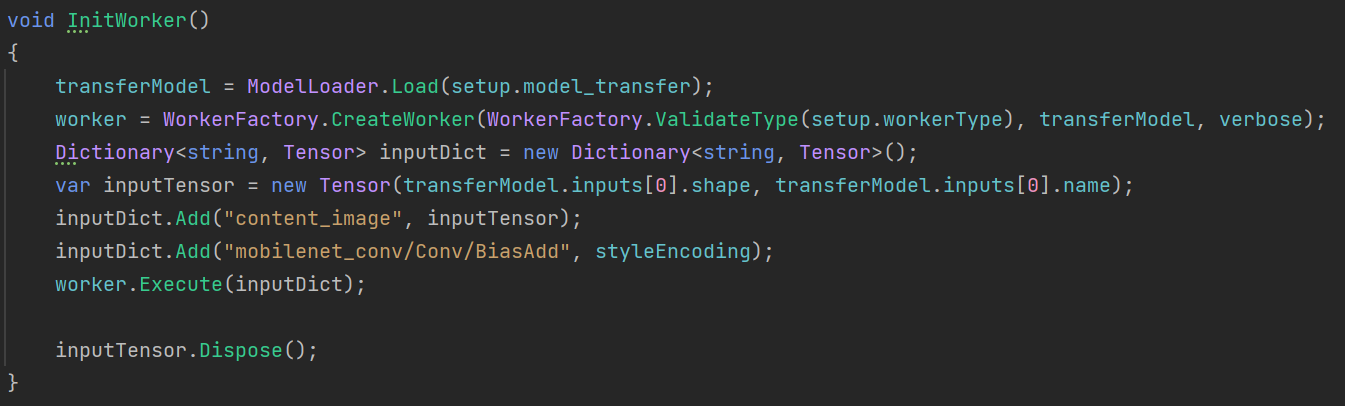

Creating Worker

Unity uses a "worker" to build the data pipeline between the CPU and GPU, as well as to manage asynchronous execution on the GPU. The developer has to create and delete workers manually (otherwise there will be a memory leak!). Here I created the worker with the necessary parameters, and also ran the worker once to initialize some internal data and memory allocation, a step recommended by Unity.
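The create, warm up, dispose lifecycle described above can be sketched independently of the engine. The Python below is a conceptual illustration only: `ToyWorker` and `managed_worker` are hypothetical names and do not correspond to the Sentis API, which is C#.

```python
from contextlib import contextmanager


class ToyWorker:
    """Hypothetical stand-in for an inference worker; NOT the Sentis API."""

    live_workers = 0  # workers created but not yet disposed (a leak counter)

    def __init__(self, model):
        self.model = model
        self.warmed_up = False
        self.disposed = False
        ToyWorker.live_workers += 1

    def execute(self, inputs):
        if self.disposed:
            raise RuntimeError("worker used after dispose")
        self.warmed_up = True  # the first run stands in for internal allocations
        return self.model(inputs)

    def dispose(self):
        if not self.disposed:
            self.disposed = True
            ToyWorker.live_workers -= 1


@contextmanager
def managed_worker(model, warmup_input):
    worker = ToyWorker(model)
    try:
        worker.execute(warmup_input)  # warm-up run; the result is discarded
        yield worker
    finally:
        worker.dispose()  # always released, even if a frame raises
```

Tying disposal to a `finally` block is one way to make sure the worker is released on every exit path; in a Unity script the analogous place would be wherever the component is torn down.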

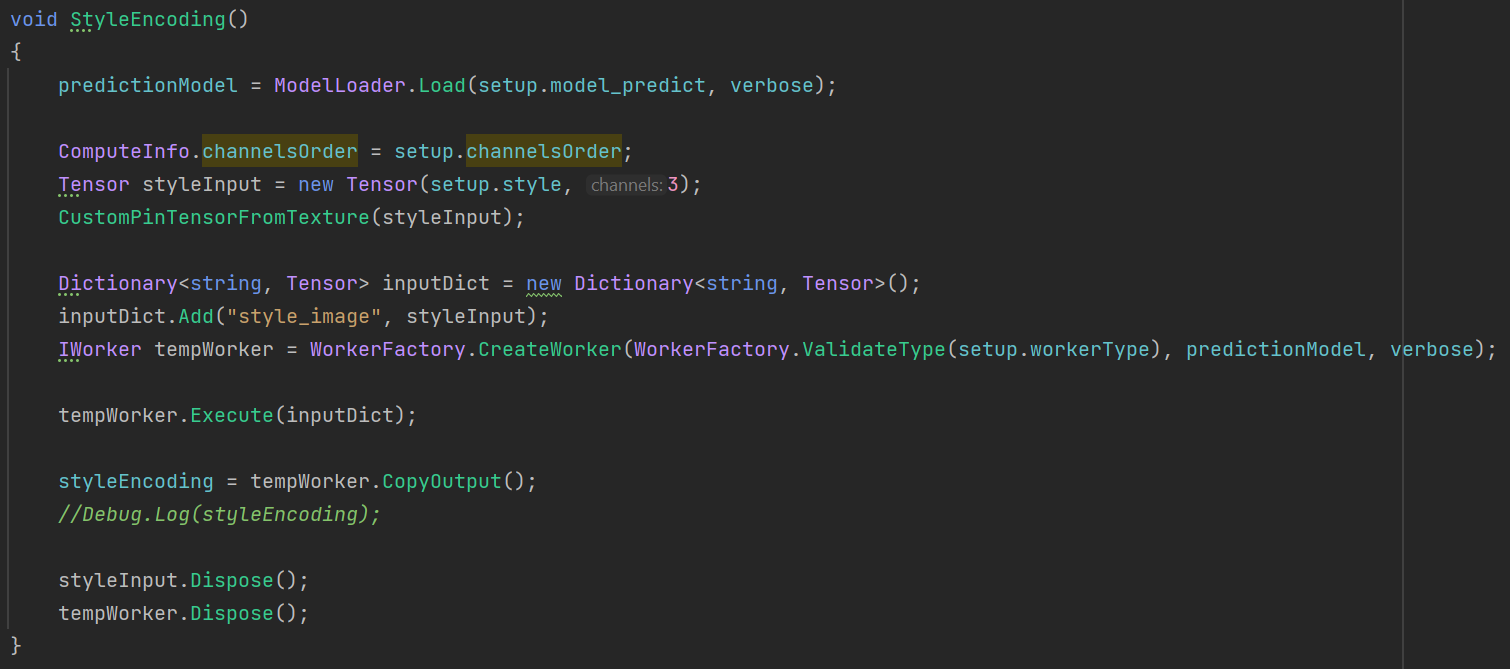

Style Encoding

This is where I calculate the style embedding at initialization and save it as intermediate data to speed up model queries. The data type for ML data in Unity is the Sentis Tensor. As with workers, tensors must be created and deleted manually, and with care, to prevent memory leaks and null pointer errors.

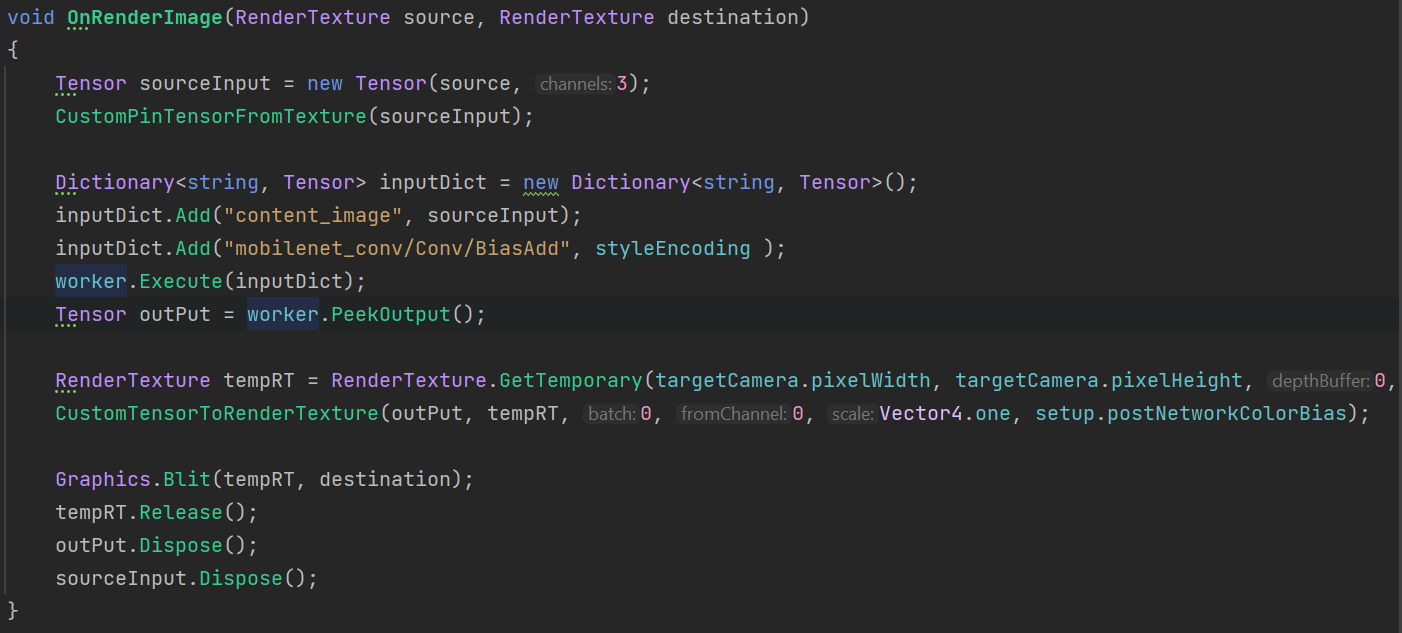

Realtime AI Query

This is a Unity-defined function; by implementing it, we can create custom post-processing effects in the default render pipeline. Here the remaining encoder network and the decoder network are merged into one network that takes the source image and the pre-calculated style embedding as inputs. The resulting tensor is converted to a texture and then "blitted" to the screen.
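To make the tensor-to-texture step concrete, here is a minimal Python sketch of the kind of conversion involved. It assumes the output tensor is a flat list of floats in channel-first (CHW) RGB order with values nominally in [0, 1]; that layout, and the function name `tensor_to_rgba8`, are assumptions for illustration and not taken from the project.

```python
def tensor_to_rgba8(tensor, height, width):
    """Convert a flat CHW float RGB tensor to interleaved 8-bit RGBA bytes."""
    plane = height * width  # number of pixels in one channel
    if len(tensor) != 3 * plane:
        raise ValueError("expected 3 * height * width values")

    pixels = bytearray(plane * 4)
    for i in range(plane):
        for c in range(3):
            # Network outputs can overshoot slightly, so clamp to [0, 1].
            value = min(1.0, max(0.0, tensor[c * plane + i]))
            # Scale to 0..255 and round to the nearest integer.
            pixels[4 * i + c] = int(value * 255.0 + 0.5)
        pixels[4 * i + 3] = 255  # fully opaque alpha
    return bytes(pixels)
```

In the engine this conversion would normally happen on the GPU; the CPU loop here only shows the clamping, scaling and channel reordering that the step implies.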

Demo

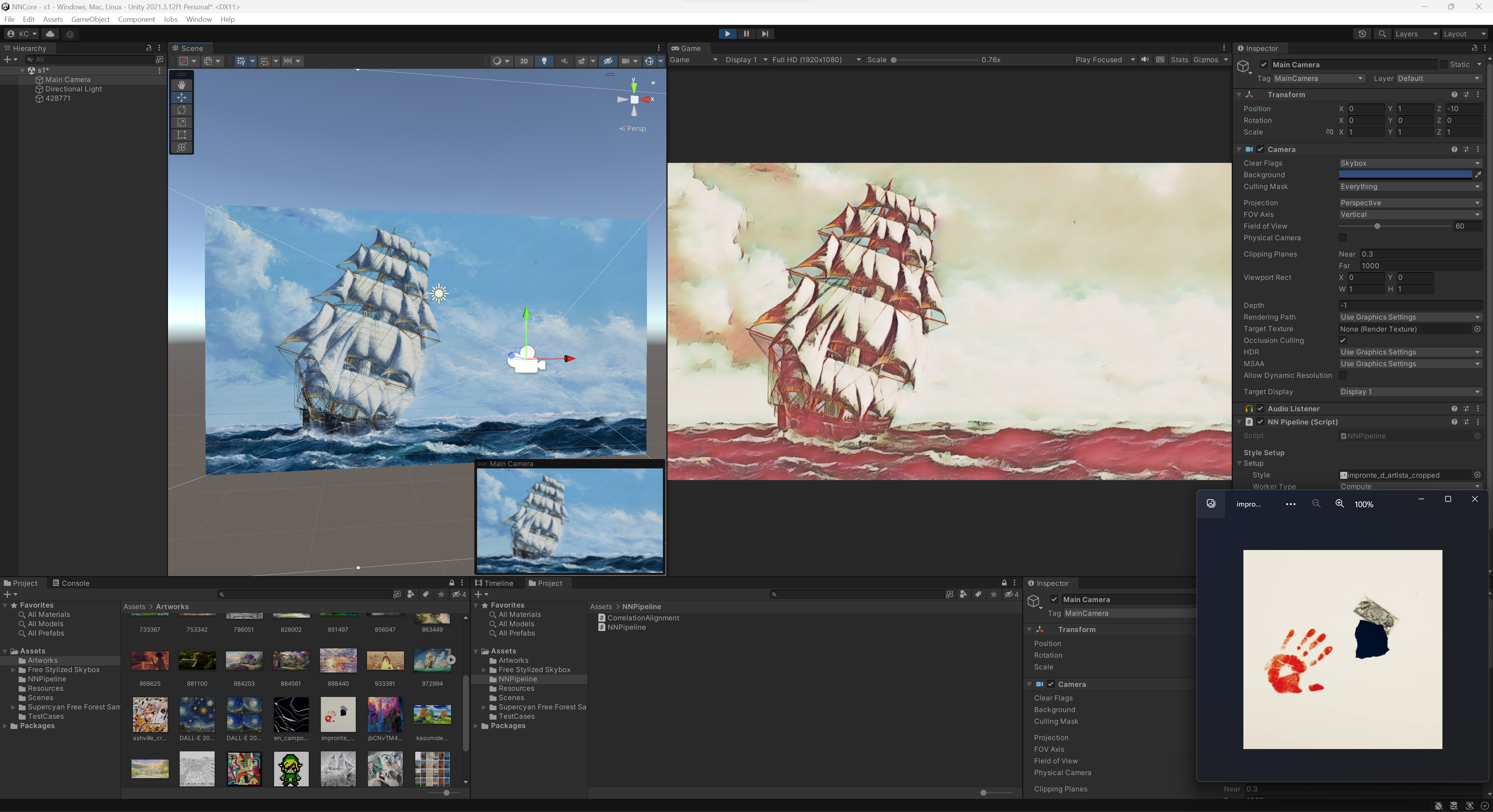

First I rendered some static images on a Canvas in Unity to make sure the model works. The left is the source image, the right is the output image, and the image in the bottom right corner is the style reference:

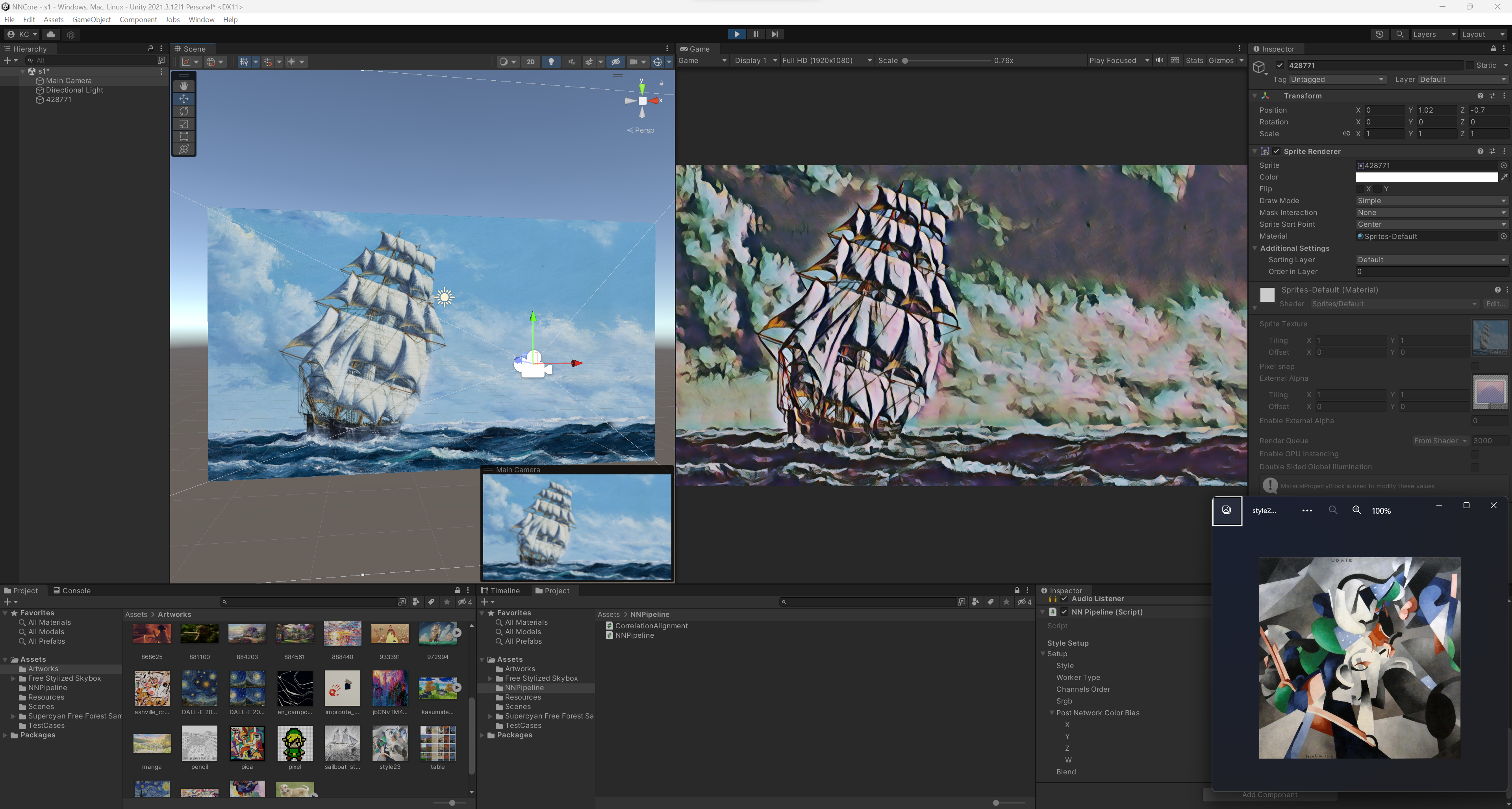

Then I tested the results in actual scenes with different style references. I imported a simple mountain scene into Unity (credit to the Unity Asset Store!):

Fin.